Supervised vs Unsupervised Learning: Key Differences Explained

What Is Machine Learning?

Before comparing supervised and unsupervised learning, it helps to understand machine learning itself. When I first dived into the world of machine learning, I found it fascinating how it serves as a branch of artificial intelligence (AI) and computer science. It’s all about using data and algorithms to help AI imitate the way humans learn, gradually improving the accuracy of its predictions. According to insights from UC Berkeley, the heart of this learning process involves three parts: a decision process, an error function, and model optimization. In the decision process, the algorithm uses input data, which can be labeled or unlabeled, to make a prediction or classification. Supervised and unsupervised learning are two of the main types of machine learning.

The error function evaluates the model’s output by comparing it with known examples and measuring any discrepancy. To improve accuracy, the model optimization step adjusts the weights so the model better fits the data points in the training set. The algorithm repeats this “evaluate and optimize” process on its own until it reaches a chosen accuracy threshold. I remember the first time I saw this in action: it was like watching a model learning to walk before it could run. The magic lies in how it optimizes its pattern recognition without direct intervention, making it both a complex and thrilling field to explore.
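To make the three parts concrete, here is a minimal sketch of an “evaluate and optimize” loop in plain Python. It is an illustration rather than how any particular library does it: the toy data, the straight-line model, the learning rate, and the threshold are all assumptions chosen for this example.

```python
# Toy training set: inputs paired with known answers (exactly y = 2x + 1).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def predict(w, b, x):
    """Decision process: turn an input into a prediction."""
    return w * x + b

def mean_squared_error(w, b):
    """Error function: average squared gap between predictions and known answers."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(learning_rate=0.05, threshold=1e-6, max_steps=10_000):
    """Model optimization: adjust the weights until the error falls below a threshold."""
    w, b = 0.0, 0.0
    for _ in range(max_steps):
        if mean_squared_error(w, b) < threshold:
            break
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in zip(xs, ys)) / len(xs)
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train()
print(f"w={w:.3f}, b={b:.3f}, error={mean_squared_error(w, b):.2e}")
```

The loop stops by itself once the error is small enough, which is the “threshold” idea described above.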

What Is Supervised Learning?

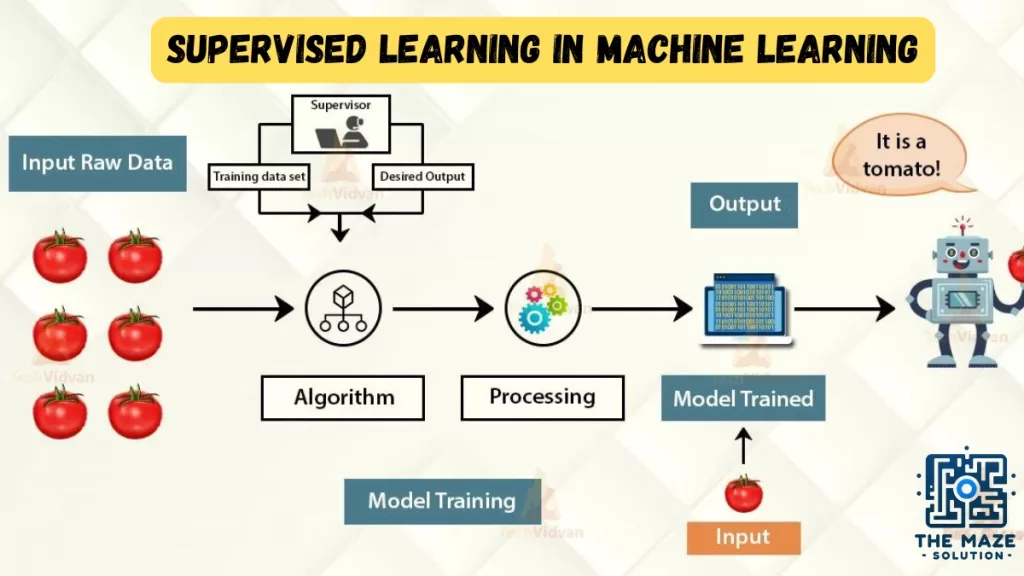

Understanding Supervised Learning

When I first encountered supervised learning, I imagined it like having a teacher guiding the machine through a set of lessons. This type of learning uses labeled data, where each piece of information is tagged with the correct answer. For instance, if you have a dataset of images containing an elephant, a camel, and a cow, each image would be marked accordingly. This approach helps the algorithm learn how to classify new information by analyzing these examples. The labels play the role of the supervisor: because the machine is trained on well-labeled data, it can produce accurate outcomes.

Unsupervised learning, by contrast, operates without this guidance, which is one of the key differences between the two. Supervised learning feeds labeled data to the machine to teach it the correct classification, and the algorithm continuously analyzes and refines its predictions during training. In this way the machine learns to identify patterns in the dataset and use them to make predictions. This structured approach gives supervised learning a reliable framework for achieving precise outcomes.

Key Points

- Supervised learning is like sorting a basket of fruit using training data.

- Each fruit is labeled, helping the machine identify and analyze its features.

- The machine learns to extract details like shape, color, and texture.

- It can then compare these details to make predictions on new data.

Examples

Imagine you have an image showing a fruit. The machine examines its shape, perhaps rounded with a slight depression at the top. If it’s red, it might be labeled as an apple. If the object is long and curved like a cylinder, and green-yellow, it’s likely a banana. By learning from these examples, the machine can predict whether a new fruit fits a specific category. This method allows it to handle various kinds of objects effectively. Test data that the machine has not seen during training is then used to check how accurate those predictions are.
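The fruit example can be sketched with a very simple classifier. This is only an illustration: the two features (`roundness` and `redness`), their made-up scores, and the choice of a 1-nearest-neighbor rule are assumptions, not something a real fruit dataset would necessarily look like.

```python
import math

# Hypothetical labeled training data: (roundness, redness) -> fruit name.
# Both features are invented scores between 0 and 1.
training_data = [
    ((0.90, 0.85), "Apple"),
    ((0.95, 0.80), "Apple"),
    ((0.85, 0.90), "Apple"),
    ((0.20, 0.10), "Banana"),
    ((0.15, 0.20), "Banana"),
    ((0.25, 0.15), "Banana"),
]

def classify(features):
    """Return the label of the closest training example (1-nearest neighbor)."""
    _, label = min(training_data, key=lambda item: math.dist(item[0], features))
    return label

print(classify((0.88, 0.75)))  # a round, red fruit
print(classify((0.10, 0.25)))  # a long, yellowish fruit
```

The labels in `training_data` are what makes this supervised: the machine only knows the word “Apple” because someone tagged the examples with it.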

Types of Supervised Machine Learning

Regression

In the world of supervised learning, regression is a powerful tool used to predict continuous values. Imagine trying to estimate house prices or stock values. Here, the output variable is a real value, like an amount in dollars or a weight. The algorithm learns a function that maps from the input features to the output value. Some popular regression algorithms include linear regression, polynomial regression, and support vector regression (SVR). These methods help in understanding how different features affect the output, allowing us to make informed predictions.
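As a rough sketch of regression, the snippet below fits a straight line to a handful of house sizes and prices using ordinary least squares. The numbers are hypothetical, and real house-price models would use many more features than size alone.

```python
# Hypothetical data: house size in square meters, price in thousands of dollars.
sizes = [50.0, 70.0, 90.0, 110.0, 130.0]
prices = [150.0, 200.0, 260.0, 300.0, 360.0]

def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_line(sizes, prices)
predicted = slope * 100.0 + intercept  # continuous output for a 100 square meter house
print(f"slope={slope:.2f}, intercept={intercept:.2f}, predicted={predicted:.1f}")
```

The output is a number on a continuous scale, which is what separates regression from classification.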

Classification

On the other hand, classification deals with predicting categorical outcomes. This is where the output variable is a category, such as red or blue, or whether an email is spam or not. The algorithm learns to map input features to a class label, and many algorithms do this by estimating a probability for each class. For instance, it might predict if a medical image shows a tumor or not. Common classification algorithms include logistic regression, support vector machines, decision trees, random forests, and naive Bayes. These tools help in sorting data into distinct categories, making it easier to understand and act upon.
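Here is a minimal sketch of logistic regression, one of the algorithms named above, written in plain Python. The single feature (a count of suspicious words per email), the tiny dataset, and the training settings are assumptions made for illustration only.

```python
import math

# Hypothetical data: suspicious words per email, and whether it was spam (1) or not (0).
counts = [0.0, 1.0, 2.0, 3.0, 6.0, 7.0, 8.0, 9.0]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def train(xs, ys, learning_rate=0.1, steps=5000):
    """Fit weight w and bias b by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / len(xs)
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train(counts, labels)

def spam_probability(count):
    """Estimated probability that an email with this many suspicious words is spam."""
    return sigmoid(w * count + b)

print(f"P(spam | 8 words) = {spam_probability(8.0):.2f}")
print(f"P(spam | 1 word)  = {spam_probability(1.0):.2f}")
```

The model outputs a probability, and a category is obtained by applying a cutoff such as 0.5.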

In my experience, using supervised learning for tasks like predicting customer churn or classifying medical conditions has been incredibly insightful. The ability to work with labeled data that is already tagged with the correct answer allows the machine to learn effectively. By leveraging these algorithms, we can achieve precise results, whether it’s predicting a house price or determining if an email is spam. The key is in understanding the differences between regression and classification and applying them appropriately to solve real-world problems.

Evaluating Supervised Learning Models

Evaluating supervised learning models is an important step to make sure they are both accurate and generalizable. This process uses various metrics to ensure that the model is performing well. These metrics help in understanding how well the model can predict actual values and how close its predictions are. The key is to use metrics that align with the type of supervised learning being applied, whether it’s regression or classification.

Regression

For regression, Mean Squared Error (MSE) is often used. It measures the average of the squared errors between the predicted and actual values. A lower MSE indicates a better model. Another metric is the Root Mean Squared Error (RMSE), which is the square root of MSE and expresses the typical size of the errors in the same units as the target variable. This is useful for understanding how much the predictions deviate from the actual values. Mean Absolute Error (MAE) gives another view by measuring the average absolute difference, and because the errors are not squared it is less sensitive to outliers than MSE and RMSE. Lastly, R-squared, or the coefficient of determination, shows the proportion of variance in the target variable that the model can explain. A higher R-squared value means the model fits the data better.
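The four regression metrics can be worked through on a small example. The actual and predicted values below are invented for illustration.

```python
import math

# Hypothetical actual values and model predictions.
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.0, 5.0, 8.0, 11.0]

errors = [p - a for p, a in zip(predicted, actual)]  # -1, 0, 1, 2

mse = sum(e ** 2 for e in errors) / len(errors)   # average squared error
rmse = math.sqrt(mse)                             # same units as the target
mae = sum(abs(e) for e in errors) / len(errors)   # average absolute error

mean_actual = sum(actual) / len(actual)
ss_res = sum(e ** 2 for e in errors)                    # unexplained variation
ss_tot = sum((a - mean_actual) ** 2 for a in actual)    # total variation
r_squared = 1 - ss_res / ss_tot

print(f"MSE={mse:.2f}, RMSE={rmse:.3f}, MAE={mae:.2f}, R^2={r_squared:.2f}")
```

Notice that the single error of 2 contributes 4 to the squared-error total but only 2 to the absolute-error total, which is why MSE and RMSE react more strongly to outliers than MAE.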

Classification

In classification, accuracy is a commonly used metric. It represents the percentage of predictions that are correct. However, it doesn’t always give the full picture, especially if the classes are imbalanced. That’s where precision and recall come in. Precision measures the percentage of positive predictions that are actually correct, while recall measures the percentage of all positive examples that the model correctly identifies. The F1 score is the harmonic mean of precision and recall, providing a balance between them. A confusion matrix can also be useful, as it is a table showing the number of predictions for each class against the actual class labels. This helps to visualize where the model is struggling and where it performs well.
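The same kind of walk-through works for classification metrics. The labels below are hypothetical, with 1 standing for the positive class (for example, spam) and 0 for the negative class.

```python
# Hypothetical true labels and model predictions (1 = positive, 0 = negative).
actual = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

pairs = list(zip(actual, predicted))
tp = sum(1 for a, p in pairs if a == 1 and p == 1)  # true positives
fp = sum(1 for a, p in pairs if a == 0 and p == 1)  # false positives
fn = sum(1 for a, p in pairs if a == 1 and p == 0)  # false negatives
tn = sum(1 for a, p in pairs if a == 0 and p == 0)  # true negatives

accuracy = (tp + tn) / len(pairs)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean

# Confusion matrix: rows are actual classes, columns are predicted classes.
confusion_matrix = [[tn, fp],
                    [fn, tp]]

print(f"accuracy={accuracy:.2f}, precision={precision:.2f}, recall={recall:.2f}, f1={f1:.2f}")
print(confusion_matrix)
```

Here accuracy looks reasonable, yet recall shows that half of the positive examples were missed, which is the kind of gap accuracy alone can hide.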

Applications of Supervised Learning

- Spam Filtering: Algorithms are trained to identify and classify emails based on their content, helping users avoid unwanted messages.

- Image Classification: Automatic categorization of images into groups like animals, objects, or scenes, enabling improved image search and product recommendations.

- Medical Diagnosis: Analysis of patient data, including medical images, test results, and history, to detect patterns that suggest specific diseases or conditions.

- Fraud Detection: Models analyze financial transactions to identify fraudulent activity, protecting institutions and customers.

- Natural Language Processing (NLP): Crucial for tasks like sentiment analysis, machine translation, and text summarization, allowing machines to better understand and process human language.

What Is Unsupervised Learning?

Understanding Unsupervised Learning

Unsupervised learning is a type of machine learning that works with unlabeled data. This means the data doesn’t have any pre-existing labels or categories. The goal of unsupervised learning is to discover patterns and relationships in the data without any guidance.

Key Points

- Unsupervised learning allows the model to discover patterns and relationships in unlabeled data.

- Clustering algorithms group similar data points together based on their inherent characteristics.

- Feature extraction captures essential information from the data, enabling the model to make meaningful distinctions.

- Label association happens afterwards: once clusters are found, categories can be assigned to them based on the extracted patterns and characteristics.

Example

Imagine you have a large dataset of unlabeled images containing both dogs and cats. The model has never been told which images show a dog and which show a cat, and it has no pre-existing labels or categories for these animals. Your task is to use unsupervised learning to separate the dogs from the cats. Because the model has no idea what a dog or a cat is, it can’t name the groups. However, it can sort the images according to their similarities, patterns, and differences, ending up with one group that mostly contains dogs and another that mostly contains cats. This is done without any labeled training examples, allowing the model to work on its own to discover patterns and information that were previously undetected.

Types of Unsupervised Learning

Unsupervised learning problems are commonly grouped into two categories:

Association: An association rule learning problem is where you want to discover rules that describe large portions of your data, such as people who buy X also tend to buy Y.

Clustering: A clustering problem is where you want to discover the inherent groupings in the data, such as grouping customers by purchasing behavior.

Clustering Algorithms

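Clustering is a type of unsupervised learning used to group similar data points together. Many clustering algorithms, k-means being the best-known example, work iteratively: they assign each data point to its nearest cluster center, move each center to the middle of its assigned points, and repeat until the groups stop changing. Clustering approaches are often described by type:

- Exclusive (partitioning)

- Agglomerative

- Overlapping

- Probabilistic

Some common clustering algorithms include:

- Hierarchical clustering

- K-means clustering

- Gaussian Mixture Models (GMMs)

- Density-Based Spatial Clustering of Applications with Noise (DBSCAN)

Closely related are dimensionality-reduction techniques. They do not form clusters themselves, but they are often used to simplify data before clustering:

- Principal Component Analysis

- Singular Value Decomposition

- Independent Component Analysis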
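To show what a clustering algorithm actually does, here is a small sketch of k-means in plain Python. The customer data and the fixed starting centers are assumptions for this example; practical implementations usually choose starting centers randomly or with a scheme such as k-means++.

```python
import math

# Hypothetical unlabeled data: (visits per month, average basket in dollars).
points = [(1.0, 10.0), (2.0, 12.0), (1.5, 11.0),
          (8.0, 50.0), (9.0, 55.0), (8.5, 52.0)]

def k_means(points, centers, iterations=10):
    """Alternate between assigning points to the nearest center and re-centering."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for point in points:
            nearest = min(range(len(centers)),
                          key=lambda i: math.dist(point, centers[i]))
            clusters[nearest].append(point)
        # Move each center to the mean of its points (unchanged if the cluster is empty).
        centers = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else center
            for cluster, center in zip(clusters, centers)
        ]
    return centers, clusters

# Assumption: start from the first and last data points as initial centers.
centers, clusters = k_means(points, centers=[points[0], points[-1]])
for center, cluster in zip(centers, clusters):
    print(center, cluster)
```

No labels appear anywhere in the data. The two groups emerge purely from how close the points are to each other, and it is up to a person to decide afterwards what each group means.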

Association Rule Learning Algorithms

Association rule learning is a type of unsupervised learning used to identify patterns in data. Association rule learning algorithms work by finding relationships between different items in a dataset. Some common association rule learning algorithms include:

- Apriori Algorithm

- Eclat Algorithm

- FP-Growth Algorithm
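The algorithms above all build on two basic measures, support and confidence. The sketch below computes them directly for a handful of invented shopping baskets. It is not an implementation of Apriori, Eclat, or FP-Growth, which add efficient ways of searching through many candidate itemsets.

```python
# Hypothetical shopping baskets.
transactions = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "butter"},
    {"bread", "butter", "jam"},
]

def support(itemset):
    """Fraction of transactions that contain every item in the itemset."""
    return sum(1 for t in transactions if itemset <= t) / len(transactions)

def confidence(antecedent, consequent):
    """Of the transactions containing the antecedent, the fraction that also contain the consequent."""
    return support(antecedent | consequent) / support(antecedent)

print(f"support(bread, butter) = {support({'bread', 'butter'}):.2f}")
print(f"confidence(bread -> butter) = {confidence({'bread'}, {'butter'}):.2f}")
```

A rule such as “people who buy bread also tend to buy butter” is considered interesting when both its support and its confidence are above thresholds chosen by the analyst.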

In my experience, unsupervised learning can be a powerful tool for discovering hidden insights and patterns in data, without the need for pre-existing labels or categories. By leveraging clustering and association rule learning, we can uncover meaningful groupings and relationships that may not be immediately apparent, leading to valuable discoveries and better decision-making.

Applications of Unsupervised Learning

- Anomaly detection: Unsupervised learning can identify unusual patterns or deviations from normal behavior in data, enabling the detection of fraud, intrusion, or system failures.

- Scientific discovery: Unsupervised learning can uncover hidden relationships and patterns in scientific data, leading to new hypotheses and insights in various scientific fields.

- Recommendation systems: Unsupervised learning can identify patterns and similarities in user behavior and preferences to recommend products, movies, or music that align with their interests.

- Customer segmentation: Unsupervised learning can identify groups of customers with similar characteristics, allowing businesses to target marketing campaigns and improve customer service more effectively.

- Image analysis: Unsupervised learning can group images based on their content, facilitating tasks such as image classification, object detection, and image retrieval.

Conclusion

In conclusion, both supervised and unsupervised learning have their unique strengths and applications. Supervised learning excels at tasks like spam filtering, image classification, medical diagnosis, fraud detection, and natural language processing, where labeled data and predefined objectives are available. Its advantages include the ability to optimize performance and solve real-world problems, while its disadvantages involve the effort of labeling and classifying big data and the difficulty of handling complex tasks.

On the other hand, unsupervised learning shines in areas like anomaly detection, scientific discovery, recommendation systems, customer segmentation, and image analysis, where the goal is to uncover hidden patterns and relationships in unlabeled data. The advantages of unsupervised learning include its capability to find previously unknown patterns and provide insights that might not be accessible otherwise. However, it also faces challenges in measuring accuracy and evaluating performance due to the lack of predefined answers.